Last Update: October 3, 2021

BY eric


Overview

People keep talking about how good Kubernetes is. Okay, I get it, and I would love to learn how to use Kubernetes to deploy apps and services, for my work or for my clients. A scalable NGINX web service seems like a very good use case. But is it really necessary to read through all the documentation just to deploy a simple NGINX web service? From a developer's perspective, it shouldn't be, right? All I need is a simple tutorial from the Internet.

However, it turned out not to be as simple as it seems. Stacks such as LAMP or MEAN have tons of tutorials showing how to configure a development or production environment properly. In comparison, it is hard to find useful material demonstrating a simple NGINX deployment with Kubernetes. Whatever search keywords you choose, Google mostly returns documentation from Kubernetes service providers. Even after reading plenty of those docs, there is still no guarantee that things will work as expected. A Kubernetes expert can certainly make things work easily, but I don't want to become one just yet, as at the moment my main job is to develop apps.

So I gave myself a crash course in Kubernetes and started testing an NGINX service deployment on AWS. It did not go well at first. I struggled a lot, ran into tons of trouble, and felt plenty of frustration and desperation just trying to get things to work. At one point I wanted to give up for good, because it seemed an impossible job without becoming a Kubernetes expert first. But finally I did it, and the Kubernetes cluster started to listen on port 80, serving web pages happily ever after.

In this article, I will write down the key points of setting up a Kubernetes ingress controller so that the cluster listens on port 80. It is not a complete tutorial for deploying an NGINX web service with Kubernetes, but you can refer to some great articles I found, which are listed in the "References" section.

Kubernetes Basics

Kubernetes Units / Formation

- Web, a website we make (with HTML, PHP, Python, Java, Node.js, etc.) to provide information or services to users

- Image, a bundle of files containing everything from the lowest-level tools and programs (nginx, tomcat, etc.) up to the application files

- Container, a running unit that a container engine starts from an image

- Pod, where the container(s) run

- Node, the machine where the pod(s) run

- Service, how an app is exposed

The Goal of Making Kubernetes Listen on Port 80

A Kubernetes cluster as a whole is an isolated environment. For security and other reasons, nothing inside the cluster is exposed to the outside world unless you configure it to be. In this article, I will explain how to configure an ingress controller so that the cluster listens on port 80.

Testing Environment

Create a Kubernetes Cluster

- Start a few VPS instances from any cloud service provider (Google Cloud, AWS, DigitalOcean), or use any Linux boxes (real physical machines such as laptops, workstations, etc.).

- Install "kubectl", "kubelet" and "kubeadm" on them

- On the master machine run "kubeadm init", and on the other machines run "kubeadm join"

You might see an error when kubelet starts up; changing Docker's cgroup driver to systemd may help.
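For the cgroup driver change mentioned above, here is a minimal sketch. It assumes Docker is the container runtime and reads its settings from /etc/docker/daemon.json; the helper name write_cgroup_config is made up for this example. The function overwrites the target file, so merge by hand if you already have a daemon.json with other settings.

```shell
#!/bin/sh
# Hypothetical helper: write a Docker daemon config that selects the
# systemd cgroup driver. The target path is an argument so the function
# can be tried on a scratch file before touching /etc/docker.
write_cgroup_config() {
    target="$1"
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Try it on a scratch file and inspect the result.
scratch="$(mktemp)"
write_cgroup_config "$scratch"
cat "$scratch"
rm -f "$scratch"
```

On a real node you would write to /etc/docker/daemon.json as root, then restart both services with "sudo systemctl restart docker" and "sudo systemctl restart kubelet".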

Building NGINX web service image

We need to create an image of the web service for the pod deployment.
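As a rough illustration of that step, the sketch below writes a small Dockerfile. It assumes the site is a set of static files in a local html/ directory and builds on the official nginx image. The directory layout, the helper name write_dockerfile, and the 1.21.3 tag (taken from the server banner that appears later in the debugging section) are assumptions, not the actual contents of tyolab/web.

```shell
#!/bin/sh
# Hypothetical helper: create a Dockerfile for a static site inside the
# given build directory (an html/ subdirectory is created if missing).
write_dockerfile() {
    build_dir="$1"
    mkdir -p "$build_dir/html"
    cat > "$build_dir/Dockerfile" <<'EOF'
# Official NGINX image as the base
FROM nginx:1.21.3
# Copy the static site into the default document root
COPY html/ /usr/share/nginx/html/
EXPOSE 80
EOF
}

# Example run in a temporary directory.
demo_dir="$(mktemp -d)"
write_dockerfile "$demo_dir"
cat "$demo_dir/Dockerfile"
rm -rf "$demo_dir"
```

From the build directory, "docker build -t [your account]/web:latest ." followed by "docker push [your account]/web:latest" would then produce an image name to put in the deployment file below.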

Creating a NGINX Pod / Deployment

Now create a NGINX deployment file nginx-deployment.yaml, with the following content:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  # apps/v1 requires a selector that matches the pod template labels;
  # "app: nginx" is an assumed label, rename it as you like
  selector:
    matchLabels:
      app: nginx
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      hostname: nginx
      containers:
        # change the image to your own image here
        - image: tyolab/web:latest
          name: nginx
          ports:
            - containerPort: 80
            - containerPort: 443
          imagePullPolicy: Always
          resources: {}
status: {}

To deploy, run

kubectl apply -f nginx-deployment.yaml

Creating a NGINX Service

Create a nginx service file: nginx-service.yaml, with the following content:

apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  # must match the labels on the NGINX pods ("app: nginx" is an assumed label)
  selector:
    app: nginx
  ports:
    - name: "80"
      port: 80
      targetPort: 80
    - name: "443"
      port: 443
      targetPort: 443
  type: LoadBalancer
status:
  loadBalancer: {}

To start the service, run

kubectl apply -f nginx-service.yaml

If the service is not created, the cluster's DNS has nothing to resolve, so the NGINX pod cannot be reached by name from a different pod.

Ingress Controller Installation

Now comes the main part, which is important because it can be confusing. We have the deployment and the service, so why doesn't it just work? There is no process listening on port 80. When we try to connect with telnet [external IP of any node] 80, it simply says "connection refused". Even with the ingress controller installed, nothing changes at first, which makes you wonder: did we install the wrong version?

To check if there is any process listening on port 80, we can use the following command from any of the nodes:

lsof -i :80

Anyway, let's install it first. We should use the bare metal version.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.4/deploy/static/provider/baremetal/deploy.yaml

At the time of writing, the latest version of the ingress controller is 1.0.4; change the version number in the URL to the latest one to suit your needs.

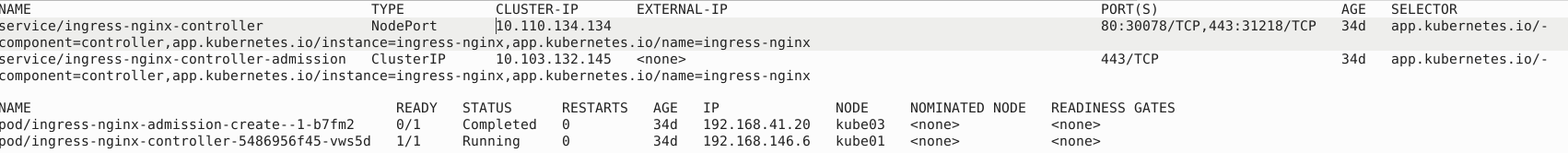

After the installation, we can check the pods and services related to the ingress controller by running the following command:

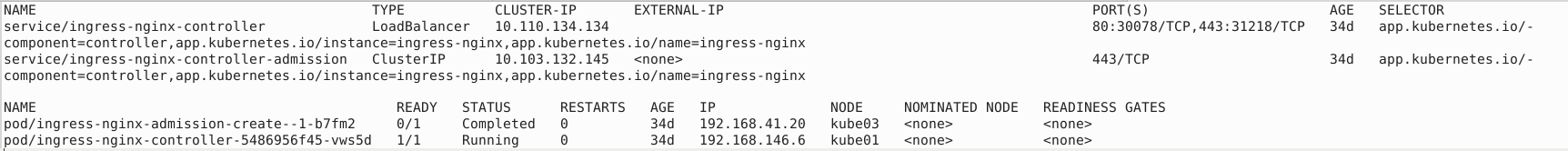

kubectl get svc,pods -n ingress-nginx --output=wide

It will show results similar to the following:

As you can see, the EXTERNAL-IP of service/ingress-nginx-controller is empty, which means the ingress controller is not able to listen on port 80 or 443 yet, because there are no IP addresses to bind to.

Also, by default, the service type of ingress nginx controller is NodePort, but we can change it to LoadBalancer by running the following:

kubectl patch svc ingress-nginx-controller -n ingress-nginx -p '{"spec": {"type": "LoadBalancer"}}'

The results will look like this:

I am not entirely sure whether the above step is necessary, but it won't hurt to change the service type to LoadBalancer anyway. If you decide to skip this step and find that port 80 is not working, you can always change the service type to LoadBalancer with the above command.

Now comes the most important step of all: create an IP patch file for the ingress controller, ip-patch.yaml, with content like the following:

spec:
  externalIPs:
    - 172.31.43.69
    - 172.31.32.123
    - 172.31.40.142
    - 172.31.37.82

The above is just an example; replace the addresses with your own, depending on the size of your cluster or the number of machines you would like to expose.

After filling in the appropriate IP addresses, we can apply the patch file to the ingress controller by running the following command:

kubectl patch service ingress-nginx-controller -n ingress-nginx --patch "$(cat ip-patch.yaml)"

This is the moment the miracle happens. Voilà. As soon as the external IP addresses are assigned, the ingress nginx controller will try to bind to them, and a process on each node starts to listen on ports 80 and 443. Those IP addresses are the actual IPs (private or public) of the Kubernetes nodes, including the master, although we are better off leaving the master node out. Please note that if you use a cloud service provider to build the cluster, the actual IP address is not the public IP address of the cluster node. The public IP is just the address assigned to the virtual machine instance (an EC2 instance on AWS, a Compute Engine instance on Google Cloud, etc.) for access from the outside world; it is used for routing and is not an address the ingress controller has control of.
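One way to avoid typing the addresses by hand is to generate the patch file from a list of IPs. The sketch below is only an illustration: make_ip_patch is a made-up helper, and the addresses passed to it are two of the example ones from above.

```shell
#!/bin/sh
# Hypothetical helper: print an externalIPs patch for the given addresses,
# in the same layout as ip-patch.yaml.
make_ip_patch() {
    printf 'spec:\n'
    printf '  externalIPs:\n'
    for ip in "$@"; do
        printf '    - %s\n' "$ip"
    done
}

# Example run with two of the sample addresses.
make_ip_patch 172.31.43.69 172.31.32.123
```

The INTERNAL-IP column of "kubectl get nodes -o wide" lists the address of each node. Redirect the function's output to ip-patch.yaml and apply it with the kubectl patch command above.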

Creating a NGINX Ingress

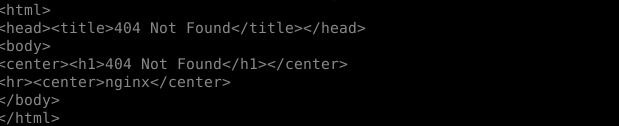

Now the cluster is listening on port 80, and any node whose IP address was provided in the above step will respond to requests on ports 80 and 443. Let's try to access it using the public IP address of any exposed cluster node.

curl [public IP of any node]

Here is what happens:

It looks bad, but it tells us that the ingress controller is working. We haven't finished yet: the ingress controller is now guarding port 80, but it cannot serve the traffic because it doesn't know which service in the cluster should handle the request.

Now, we create a nginx ingress file: nginx-ingress.yaml, with the following content:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: "nginx"
  # default backend is optional, if the ingress is not working, try to set it
  defaultBackend:
    service:
      # name of the service
      name: nginx
      port:
        number: 80
  rules:
    - http:
        paths:
          - backend:
              service:
                # name of the service
                name: nginx
                port:
                  number: 80
            path: /
            pathType: Prefix
      # Optional, the host is domain we want the service to respond to
      # if not set, the service will respond to all hosts
      # host: www.tyolab.com

Please be aware that the name of the backend is the name of the service you want the ingress controller to route the traffic to. This step is similar to setting up an upstream section in a normal NGINX configuration.

To start the ingress, run

kubectl apply -f nginx-ingress.yaml

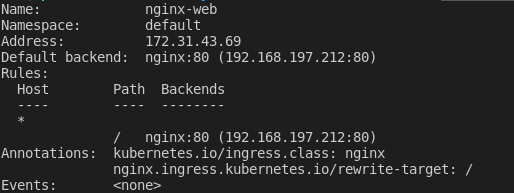

It is recommended to check the details of the ingress status by running the following command:

kubectl describe ing nginx-ingress

And you can see something similar to the following:

This is the final step. Now the ingress controller is able to serve the traffic properly.

For Debugging

In some cases things don't work as expected, no matter how certain you are that you did each step correctly. Here are some measures we can take to debug the issue.

The two most likely reasons why the whole setup is not working are:

- The service name is not resolved properly

- The web service container is not running properly

To test the above, we can run a busybox container in the cluster and use it to check that the service(s) behave properly.

kubectl run -it --rm test --image=busybox --restart=Never -- sh

If you don't see a command prompt, try pressing enter.

Once the prompt appears, we can do some testing. First of all, we need to make sure the service name is resolved properly.

/ # nslookup web3
Server: 10.96.0.10
Address: 10.96.0.10:53
** server can't find web3.default.svc.cluster.local: NXDOMAIN
*** Can't find web3.svc.cluster.local: No answer
*** Can't find web3.cluster.local: No answer
*** Can't find web3.ap-east-1.compute.internal: No answer
*** Can't find web3.default.svc.cluster.local: No answer
*** Can't find web3.svc.cluster.local: No answer
*** Can't find web3.cluster.local: No answer
*** Can't find web3.ap-east-1.compute.internal: No answer

/ # nslookup nginx
Server: 10.96.0.10
Address: 10.96.0.10:53
*** Can't find nginx.svc.cluster.local: No answer
*** Can't find nginx.cluster.local: No answer
*** Can't find nginx.ap-east-1.compute.internal: No answer
*** Can't find nginx.default.svc.cluster.local: No answer
*** Can't find nginx.svc.cluster.local: No answer
*** Can't find nginx.cluster.local: No answer
*** Can't find nginx.ap-east-1.compute.internal: No answer

/ # ping web3
ping: bad address 'web3'

/ # ping nginx
PING nginx (10.111.38.72): 56 data bytes
^C
--- nginx ping statistics ---
3 packets transmitted, 0 packets received, 100% packet loss

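As you can see, nslookup doesn't help much here, but we can ping the service name: if ping prints an IP address, the service name is resolved properly. The packet loss itself is not a concern, because the address is the service's virtual cluster IP, which usually does not answer ping.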
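If the short name gives an inconclusive answer, the fully qualified service name can be tried instead. A small sketch, assuming the cluster domain cluster.local (which matches the search domains in the output above) and the default namespace; svc_fqdn is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: build the in-cluster DNS name of a service.
# Arguments: service name, then namespace (falls back to "default").
svc_fqdn() {
    printf '%s.%s.svc.cluster.local\n' "$1" "${2:-default}"
}

svc_fqdn nginx
# prints: nginx.default.svc.cluster.local
```

Inside the busybox shell, nslookup "$(svc_fqdn nginx)" then queries the full name directly rather than relying on the search list.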

Now we test the web container:

/ # telnet nginx 80
Connected to nginx
get /
HTTP/1.1 400 Bad Request
Server: nginx/1.21.3
Date: Thu, 04 Nov 2021 01:37:44 GMT
Content-Type: text/html
Content-Length: 157
Connection: close

<html>
<head><title>400 Bad Request</title></head>
<body>
<center><h1>400 Bad Request</h1></center>
<hr /><center>nginx/1.21.3</center>
</body>
</html>
Connection closed by foreign host

From the output, we can see that the nginx container was responding, even though it returned a 400 error. Whether NGINX is running properly depends on how it behaves when it is given a proper HTTP request.
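The 400 response above is most likely caused by the hand-typed request line, since "get /" is not a well-formed HTTP request. A proper request can be built as in the sketch below; http_get_request is a made-up helper, and the host name nginx is the service name used throughout this article.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal HTTP/1.1 GET request for a host
# and an optional path, using the CRLF line endings HTTP requires.
http_get_request() {
    host="$1"
    path="${2:-/}"
    printf 'GET %s HTTP/1.1\r\nHost: %s\r\nConnection: close\r\n\r\n' "$path" "$host"
}

# Print the request that would be sent for the site root.
http_get_request nginx
```

Inside the busybox shell the request can be piped to the service with http_get_request nginx | nc nginx 80. For a quicker check, busybox's wget -qO- http://nginx/ fetches the page in one step.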

References

During the whole process, I was lucky that I still managed to find some really useful materials shared by the kubernetes community.

1. Logging Into a Kubernetes Cluster With Kubectl

2. Accessing Kubernetes Pods from Outside of the Cluster

3. Kubernetes -Services, Port -Forwarding, Node Port, LoadBalancer and Ingress via MetalLB and Ngnx Ingress Controller

Credits

Photo by Rubaitul Azad on Unsplash
